Image-based sexual abuse featured in 1 in 2 cases of technology-facilitated sexual violence seen by AWARE in 2021

April 20th, 2022 | Gender-based Violence, News, Press Release, TFSV

This post was originally published as a press release on 20 April 2022.

* Correction notice, 6 Dec 2022: When our analysis was performed in early 2022, our system had not captured the full range of TFSV cases seen by SACC. This error affected our 2019, 2020 and 2021 TFSV data. We have since amended all three posts accordingly. We sincerely apologise for the errors.

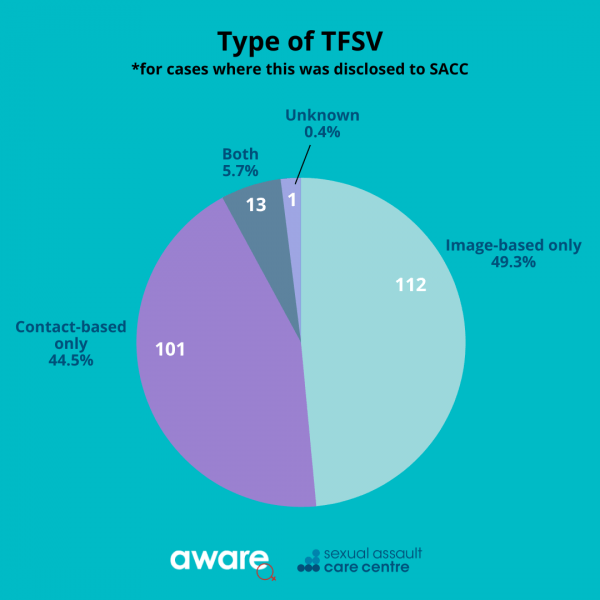

Half of the cases of technology-facilitated sexual violence seen by AWARE’s Sexual Assault Care Centre (SACC) in 2021 involved image-based sexual abuse (IBSA).

The gender-equality group today released its annual analysis of technology-facilitated sexual violence (TFSV) cases.

TFSV is unwanted sexual behaviour carried out via digital technology, such as digital cameras, social media, messaging platforms and dating and ride-hailing apps. IBSA, meanwhile, is an umbrella term for various behaviours involving sexual, nude or intimate images or videos of another person. AWARE identifies five types of IBSA: the non-consensual creation or obtainment of sexual images (including sexual voyeurism); the non-consensual distribution of sexual images (including so-called “revenge porn”); the forced viewing of sexual images (including dick pics); sextortion; and others.

In total, AWARE’s SACC saw 227 new cases of TFSV in 2021, up from 191 new TFSV cases in 2020. TFSV cases made up more than 1 in 4 (27%) of all new cases at SACC in 2021. SACC saw an overall decrease in new cases last year (856 new cases), after an all-time high in 2020 (967 new cases) led the centre to modify its service model to manage cases more efficiently through triaging and referral.

“The pace at which sexual violence evolves and adapts to new technologies, platforms and social contexts makes it hard for researchers and support service-providers alike to keep up,” said Shailey Hingorani, AWARE’s Head of Research and Advocacy. “Who would have guessed, even a decade ago, that image-based sexual abuse would be both so diversified and so ubiquitous? We have a long and confounding journey ahead of us, fighting this.”

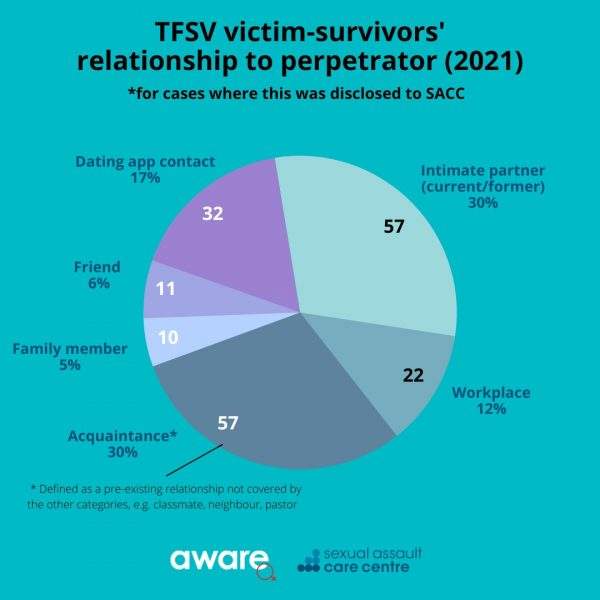

Of the 227 TFSV cases in 2021, the perpetrator was someone known to the survivor in 189 cases; the remaining 38 cases involved strangers or perpetrators whose identity was not disclosed to SACC. The most frequently reported categories of perpetrator in 2021 were current or former intimate partners (57 cases, or 30% of cases where perpetrator identity was disclosed) and acquaintances* (57 cases, or 30%), followed by dating app contacts (32 cases, or 17%). Other categories of perpetrator included family members, friends and contacts from the workplace.

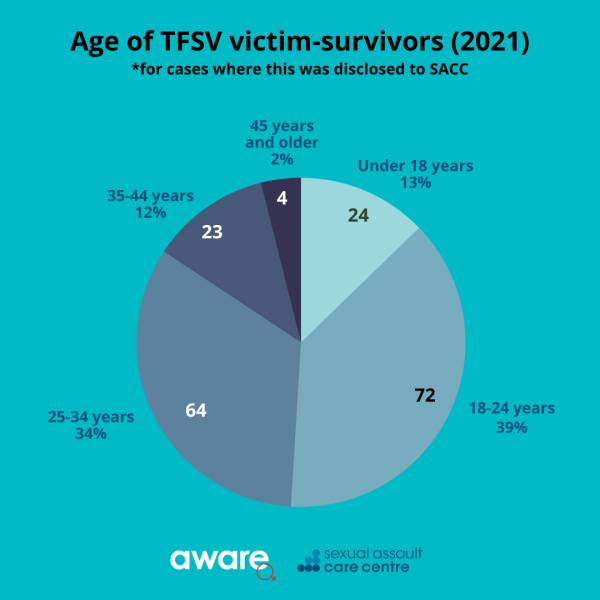

Where the age of the victim-survivor was disclosed to SACC, the largest share of cases in 2021 fell into the 18-24 age group (39%), followed by the 25-34 group (34%), under-18s (13%) and the 35-44 group (12%). A small percentage of victim-survivors were aged 45 and above.

Almost a quarter of cases in 2021 took place via messaging platforms such as Telegram and WhatsApp, a higher proportion than in previous years. Other online spaces where TFSV took place included social media, video streaming sites, porn sites and online forums.

“We are not surprised that perpetrators find messaging to be an attractive medium for TFSV, given that the end-to-end encryption made possible on messaging platforms prevents law enforcement, and the platforms themselves, from viewing the content of messages,” noted Ms Hingorani. “Advocates have been sounding the alarm recently about how direct messages, or DMs, facilitate online abuse. The Center for Countering Digital Hate, for example, released its ‘Hidden Hate’ report on Instagram DMs in early April.”

Few TFSV clients known to SACC sought assistance from the platforms themselves, i.e. by reporting the abuse and requesting the removal of images or the suspension of offending accounts. The reasons for this low rate of reporting are unclear, though it is consistent with what SACC observed in previous years.

On a positive note, Ms Hingorani cited recent initiatives to address TFSV, such as the Alliance for Action on tackling online harms, which has released a survey on the issue, and the victim support centre for online harms to be set up by Singapore Her Empowerment (SHE), a new organisation announced earlier this month.

“We have been glad to see a rise in efforts to address technology-facilitated sexual violence in Singapore,” she added. “We are particularly impressed by the leadership shown by the government to introduce new codes of practice requiring platforms to put in place systems to ensure a safer online environment. Although many big technology companies have community standards to moderate content on their sites, these new codes of practice will hopefully streamline their obligations such that a minimum level of safety is assured on all platforms. Ideally, the new codes of practice will apply to a broad range of companies, not just big tech companies—as has been the regulatory approach in some other jurisdictions.”

* “Acquaintance” is defined as a pre-existing relationship not covered by the other categories. Examples from 2021 include classmate, neighbour, pastor, landlord and social media follower.


See previous information on TFSV at SACC here.

Annex I: Definitions

Technology-facilitated sexual violence is unwanted sexual behaviour carried out via digital technology, such as digital cameras, social media and messaging platforms, and dating and ride-hailing apps. While all TFSV cases involve an aspect of technology, the abuse sometimes occurs in offline spaces too, and can take the form of physical or verbal sexual harassment, rape, sexual assault, stalking, public humiliation or intimidation. TFSV behaviours range from explicit sexual messages and calls, and coercive sex-based communications, to image-based sexual abuse.

Image-based sexual abuse is an umbrella term for various behaviours involving sexual, nude or intimate images or videos of another person. AWARE identifies five types:

- The non-consensual creation or obtainment of sexual images: including sexual voyeurism acts such as upskirting, hacking into a victim’s device to retrieve such images, and/or the creation of such images via deepfake technology

- The non-consensual distribution of sexual images: sometimes known colloquially as “revenge porn”, whereby images shared willingly by a partner or ex-partner are then disseminated to others without the subject’s consent

- The non-consensual viewing of sexual images: whereby a victim is made to view sexual content, such as pornography or dick pics, unwillingly, e.g. over message or email

- Sextortion: whereby sexual images of a victim, obtained with or without consent, are used as leverage to threaten or blackmail that victim, in order to solicit further images and/or sexual practices, money, goods or favours

- Others: including the capturing of publicly available, non-sexual images which are then non-consensually distributed in a sexualised context, e.g. with sexual comments and/or on a platform known for sexual content, such as the “SG Nasi Lemak” genre of Telegram group

Annex II: Selected Technology-Facilitated Sexual Violence Cases from 2021

Case A: The client found a hidden camera installed in the area where she worked. She discovered that it contained upskirt videos of herself and a colleague. She expressed concern about reporting this to her company or the police, as she felt uncomfortable about the prospect of other people viewing the videos, and worried about potential professional retaliation (e.g. if the company’s reputation came under fire).

Case B: The client met the perpetrator through an online dating app and agreed to get on a video call with him. During the call, the client suspected that the perpetrator might be impersonating someone as he looked different from photos on the dating app. Though the client was reluctant, the perpetrator insisted that the client undress on the video call, which the client eventually agreed to do. Upon hanging up, however, the perpetrator revealed that he had recorded the video call without the client’s knowledge or consent. He then blackmailed the client by threatening to share the video link on social media if the client did not immediately transfer a sum of money. The client managed to get the video taken down by reporting to the platform hosting it.

Case C: The client found out that her intimate videos, along with her name, had been leaked on multiple websites. She suspected that the perpetrator was her ex-partner, with whom she had consensually shared the videos during their relationship. Although one website took down the videos after she filed a police report, she was unable to have them removed from other, international websites. Because her name was published alongside the videos, the client received an influx of follower requests from strangers on social media, which caused her distress. The police were ultimately unable to determine who had uploaded the videos.

Case D: During an event hosted on Zoom, the client received sexually explicit chat messages from two participants via the private messaging function. Although she immediately informed the event organiser about the messages, no action was taken against the perpetrators. The client had to leave the event early to avoid further harassment.

Case E: The client found out that a family member of her ex-partner was impersonating her on social media. This family member had obtained the intimate photos and videos that she had shared with her ex-partner during their relationship. The perpetrator had disseminated these on a fake social media account as well as on WhatsApp. He also initiated sexual conversations with other men online while posing as the client, and gave these men the client’s phone number. As a result, the client received many harassing calls and messages from strangers. She has filed a police report against the perpetrator.